Apache Tika

Apache Tika is a library for extracting text from most file formats, including PDF, DOC, and PPT. Tika has a simplified interface that extracts the content, making it easy to operate the library. Its main uses are related to the indexing process in search engines, content analysis (journalism, for example), and even translation (using paid APIs).

The content analysis includes metadata extraction. Metadata is information that describes a resource, in the case of Tika, a file. They are data about the data, such as creation date, language, format, permissions, subject, authors, title, and keywords. The more metadata available, the more accurate the analysis of the file’s content will be.

Written in Java, Tika is a popular library, easy to use, and continuously updated. That is why it is used in several other software, such as Apache Solr. Tika is a FOSS (free and open-source software); thus, its API is extensible for you to create your customized functionalities.

In addition to extracting content, Tika’s features include:

- Tika Server: makes its resources available via the RESTful API, which will be the subject of this article;

- Identifies the MIME type, with the pattern

type/subtype, for exampleimage/png; - Identifies metadata: for example, in a PDF the metadata is

pdf:PDFVersion,access_permission,language,dc:formatandCreation-Date(more details below); - Identifies the language of the text;

- Text translation: via the paid APIs Microsoft Translator Text, Google Cloud Translation or Lingo24;

- OCR: integrated with Tesseract OCR to extract content from images.

Advanced features

At the beginning of the project, Tika only did text extraction; however, in the most recent versions it was integrated with other libraries for more advanced uses (not detailed in this text):

- Computer vision: for example the generation of image captions;

- Machine learning (ML): integration with ML tools, such as TensorFlow and Mahout;

- Natural language processing (NLP): integration with NLP tools, such as OpenNLP and NLTK.

Tika Server

In this article, we will see how to use Tika Server and how we can take advantage of the features through its RESTful API. The installation will use via Docker.

The choice of Tika Server with Docker brings scalability to the solution. That is, for small loads, we can use only one or two Docker containers, increasing according to the demand.

In the examples, we will run two containers, one with OCR enabled and one without OCR. This design choice is important because OCR demands a lot of processing and can degrade application performance. In applications with high demand for OCR, it is essential to have a set of separated containers just to process the images. With that, the flow can is redirected to each container according to the need.

Installation via Docker

The Docker image for the examples is available on the apache/tika Docker Hub, and the latest version is 1.24, which uses Java 11. We started by downloading version 1.24 (without OCR) and 1.24-full (with OCR).

docker pull apache/tika:1.24

docker pull apache/tika:1.24-fullTo start the containers we use the following commands:

docker run -it \

--name tika-server-ocr \

-d \

-p 9998:9998 \

apache/tika:1.24-full

docker run -it \

--name tika-server \

-d \

-p 9997:9998 \

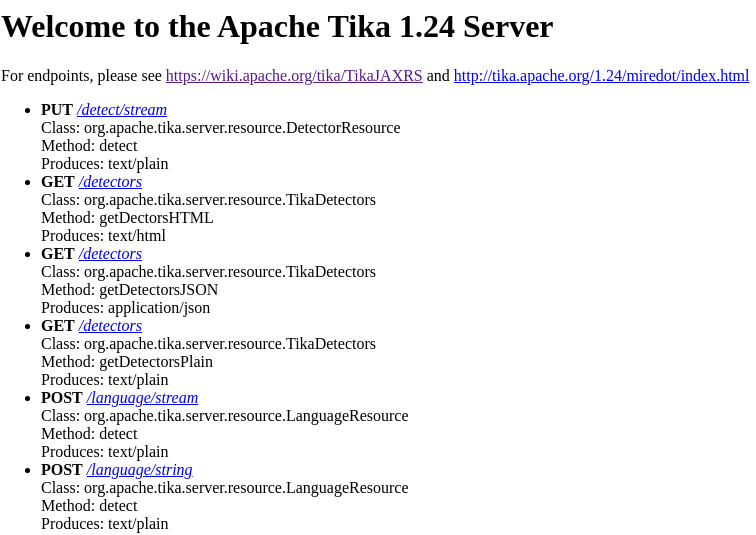

apache/tika:1.24As seen, the server with OCR is on port 9997, and the server without OCR is on port 9997. With this decision, we can choose whether or not to OCR the images. We can check if the servers are running through the URLs http://localhost:9998/ and http://localhost:9997/, as seen in the following image.

To see the server log we use the command docker logs tika-server-ocr and the result should be something like this, showing that the service is available on port 9998, in the case of the server with OCR:

May 02, 2020 3:38:37 PM org.apache.tika.config.InitializableProblemHandler$3 handleInitializableProblem

WARNING: org.xerial's sqlite-jdbc is not loaded.

Please provide the jar on your classpath to parse sqlite files.

See tika-parsers/pom.xml for the correct version.

INFO Starting Apache Tika 1.24 server

INFO Setting the server's publish address to be http://0.0.0.0:9998/

INFO Logging initialized @1831ms to org.eclipse.jetty.util.log.Slf4jLog

INFO jetty-9.4.24.v20191120; built: 2019-11-20T21:37:49.771Z; git: 363d5f2df3a8a28de40604320230664b9c793c16; jvm 11.0.6+10-post-Ubuntu-1ubuntu118.04.1

INFO Started ServerConnector@7fc44dec{HTTP/1.1,[http/1.1]}{0.0.0.0:9998}

INFO Started @1924ms

WARN Empty contextPath

INFO Started o.e.j.s.h.ContextHandler@5bda80bf{/,null,AVAILABLE}

INFO Started Apache Tika server at http://0.0.0.0:9998/Languages in Tesseract OCR

In the standard installation, the languages available in Tesseract are English (default), French, German, Italian, and Spanish. To add new ones, we need to access the container terminal through Docker and execute the following commands to install, for example, the Portuguese language. The correct choice of the text language allows greater precision in character recognition.

docker exec -it tika-server-ocr /bin/bash

apt-get update

apt-get install tesseract-ocr-porHTTP codes

The HTTP codes used on the Tika Server are:

- 200 Ok: requisition completed successfully;

- 204 No content: request completed successfully and empty result;

- 422 Unprocessable Entity: Unsupported Mime-type, encrypted document, etc.;

- 500 Error: error to process the document.

Metadata

The first example is extracting metadata from a PDF. We will use the Linux application curl and some test files in PDF, DOCX, ODT, TXT, PNG, and JPG format.

curl -T test.pdf http://localhost:9998/metaEach file type has a list of different metadata. To show specific metadata, for example, Content-type, use the URL:

curl -T test.pdf http://localhost:9998/meta/Content-TypeYou can choose the format of the result. According to the documentation available at http://localhost:9998/, we can choose between text, CSV, and JSON. To show the output in plain text, use the URL:

curl -T test.pdf http://localhost:9998/meta/Content-Type --header "Accept: text/plain"To get the result of the X-Parsed-By metadata in CSV:

curl -T test.pdf http://localhost:9998/meta/X-Parsed-By --header "Accept: text/csv"Finally, to get the result of the Creation-Date metadata in JSON:

curl -T test.odt http://localhost:9998/meta/Creation-Date --header "Accept: application/json"

Text extraction

Text extraction is the main feature of Tika. To extract the contents of the file test.docx with the Tika server, use the URL:

curl -T test.docx http://localhost:9998/tikaIn the previous case, Tika identifies the file type before selecting the appropriate parser. If you know the file type, Tika can directly choose the proper parser.

curl -T test.pdf http://localhost:9998/tika --header "Content-type: application/pdf"For large files, Tika supports multipart:

curl -F upload=@test.pdf http://localhost:9998/tika/formMIME types

The MIME types supported by Tika are: application, audio, chemical, image, message, model, multipart, text, video, and x-conference. The complete list can are available at the URL http://localhost:9998/mime-types.

OCR

Tika integrates with Tesseract OCR to extract content from images. The simplest way to OCR a PNG file is:

curl -T test.png http://localhost:9998/tikaAgain, if you know the file type and language, we can indicate the Content-type. To change the OCR language, for example to Portuguese, use the X-Tika-OCRLanguage parameter:

curl -T test.jpg http://localhost:9998/tika \

--header "Content-type: image/jpeg" \

--header "X-Tika-OCRLanguage: por"Identify language

To identify the language of the text of a file, we can use the URL:

curl -T test.odt http://localhost:9998/language/streamTo identify a text the URL is:

curl -X PUT --data "yo no hablo español muy bien" http://localhost:9998/language/stringConclusion

Apache Tika is a useful project, and Tika Server adds an extra layer of ease with the RESTful API. Thus, it is possible to access Tika’s functionalities from virtually any programming language through web services. With Docker, the solution can handle different workloads. In the example shown, two containers were created, one with OCR support and the other without it, so that the application can choose whether or not it is necessary to OCR the image.

Reference

https://cwiki.apache.org/confluence/display/TIKA/TikaServer

https://cwiki.apache.org/confluence/display/TIKA/TikaOCR

https://opensourceconnections.com/blog/2019/11/26/tika-and-tesseract-outside-of-solr/

There’s a typo in the article:

“As seen, the server with OCR is on port 9997, and the server without OCR is on port 9997.”

It should be port 9998 for tika with OCR